Multimodal Multitool

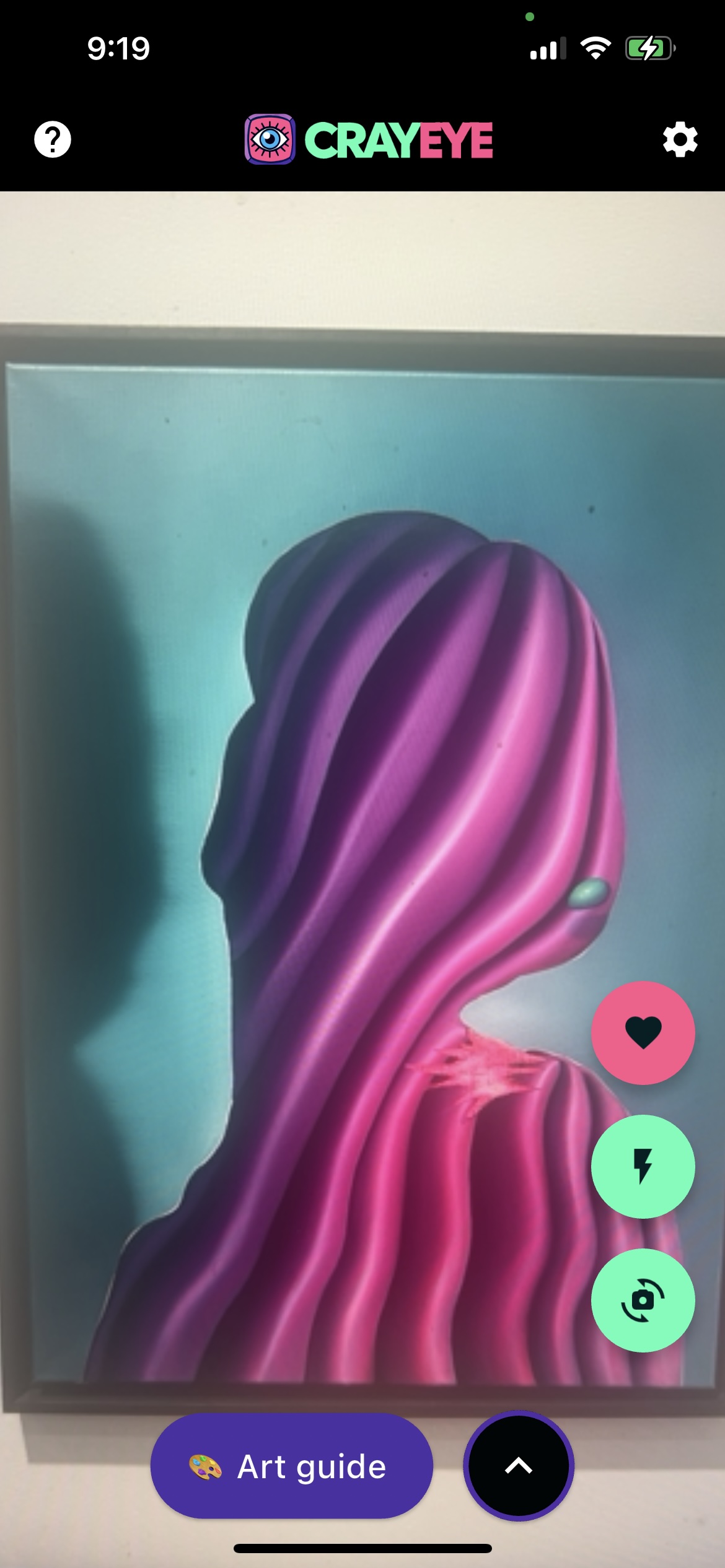

Experiment with visual multimodal models and use them to interpret your environment in new ways.

🔬 Interpret your World

Use AI to analyze your environment through your smartphone's camera

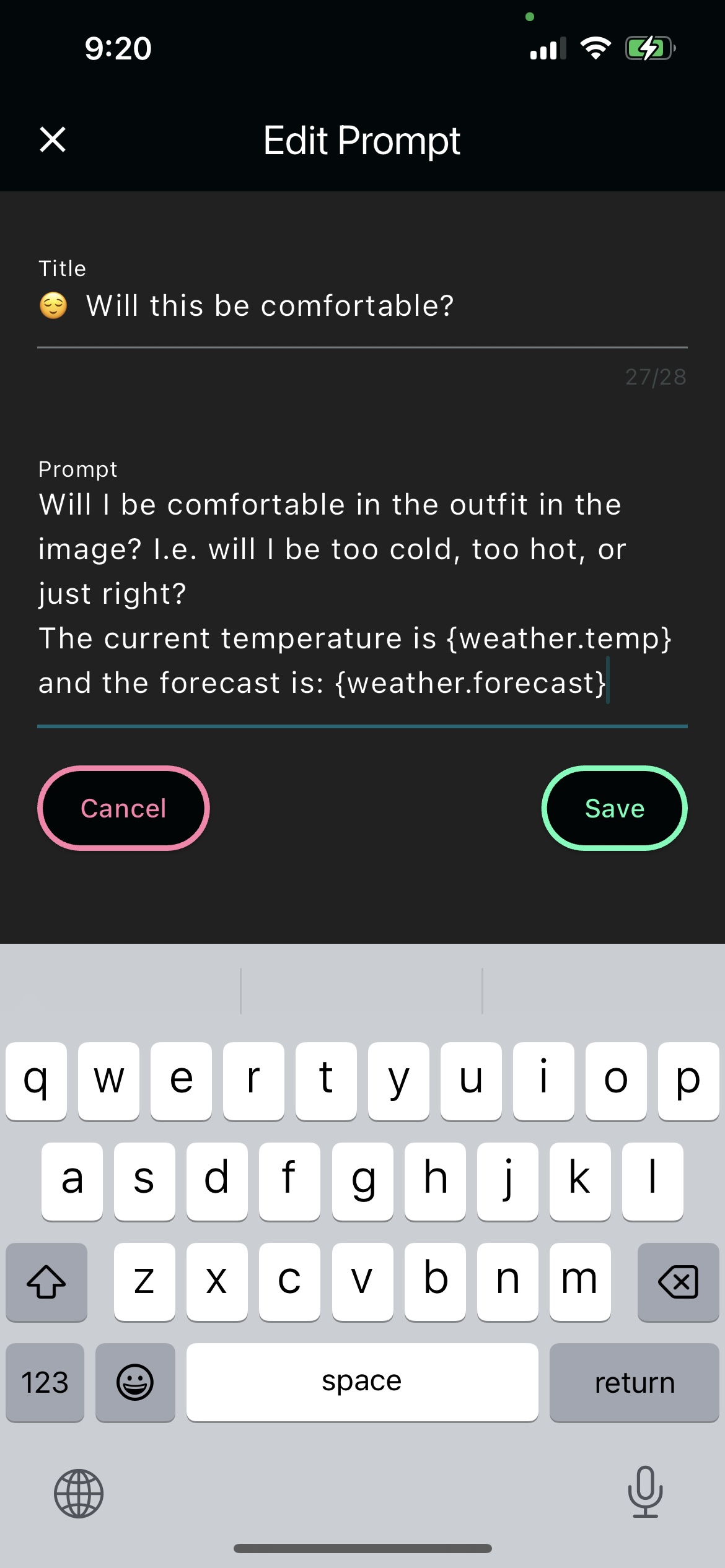

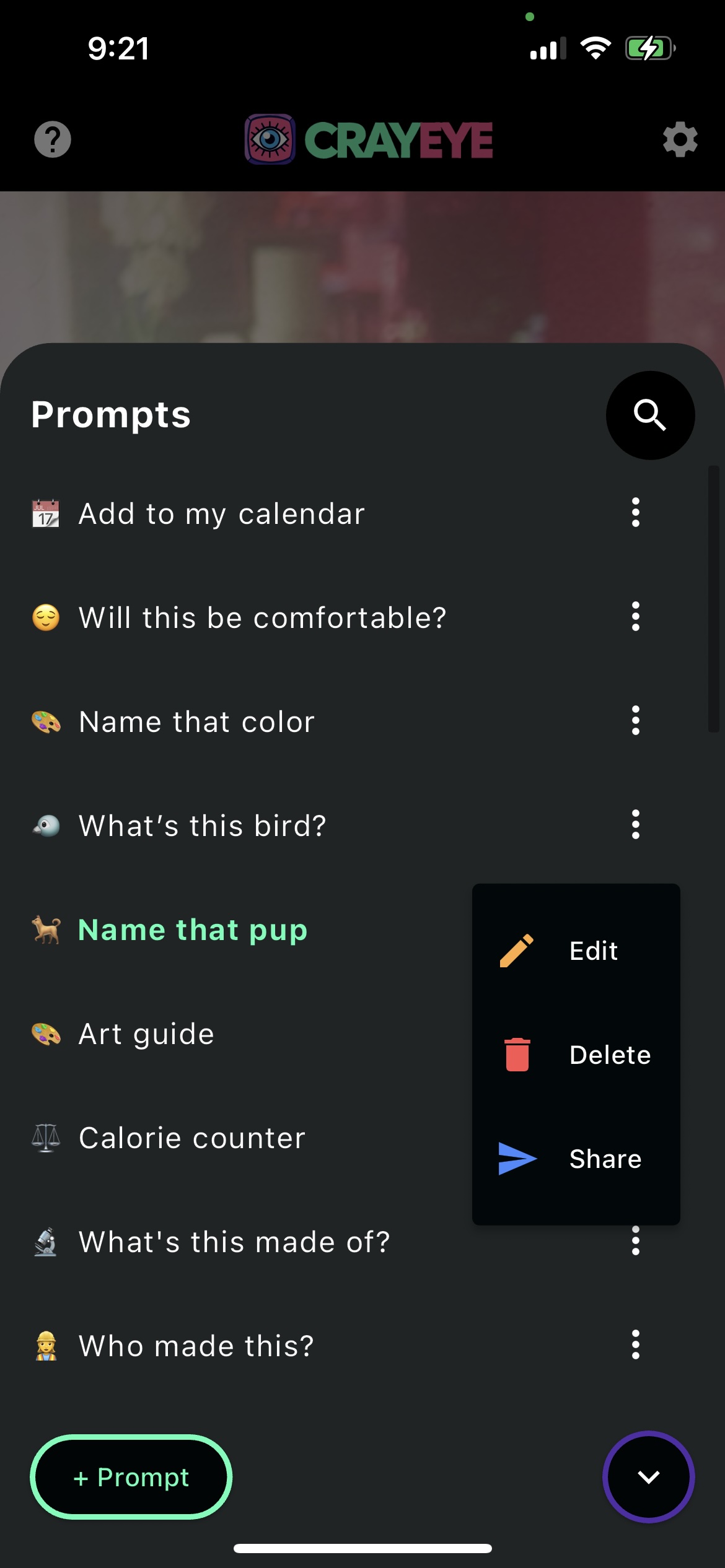

✏️ Edit Prompts

Customize prompts augmented with data from sensors & APIs (e.g., location, weather)

🤝 Share with Friends

Share the prompts you create or edit, and add your friends' prompts

Demo Reel

Check out a selection of featured CrayEye prompts that demonstrate its capabilities:

📚 Featured Prompts

CrayEye is the product of AI-driven development. Read more about how it was created here:

🛠️ How it's Made